Redesigning Content Moderation

Facebook’s family of apps sees billions of posts daily. A portion of these posts requires manual human review before a decision can be made. I helped design the product experience of human content review. This work required an intimate understanding of a unique set of users, the philosophical and ethical stakes at play, and an immensely complex technical and operational decision-making system. I care deeply about this work and believe it is among the most important challenges Facebook faces.

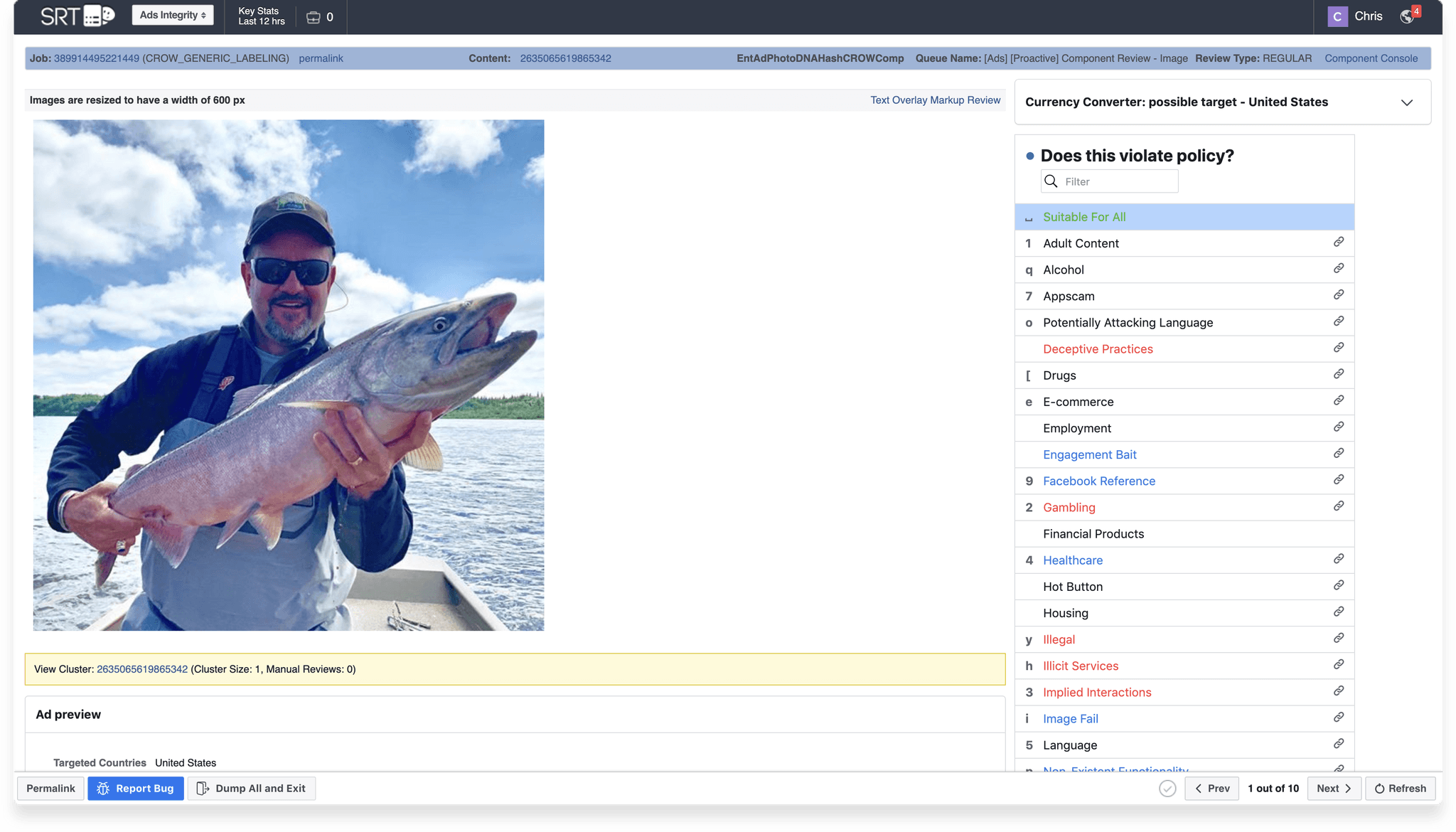

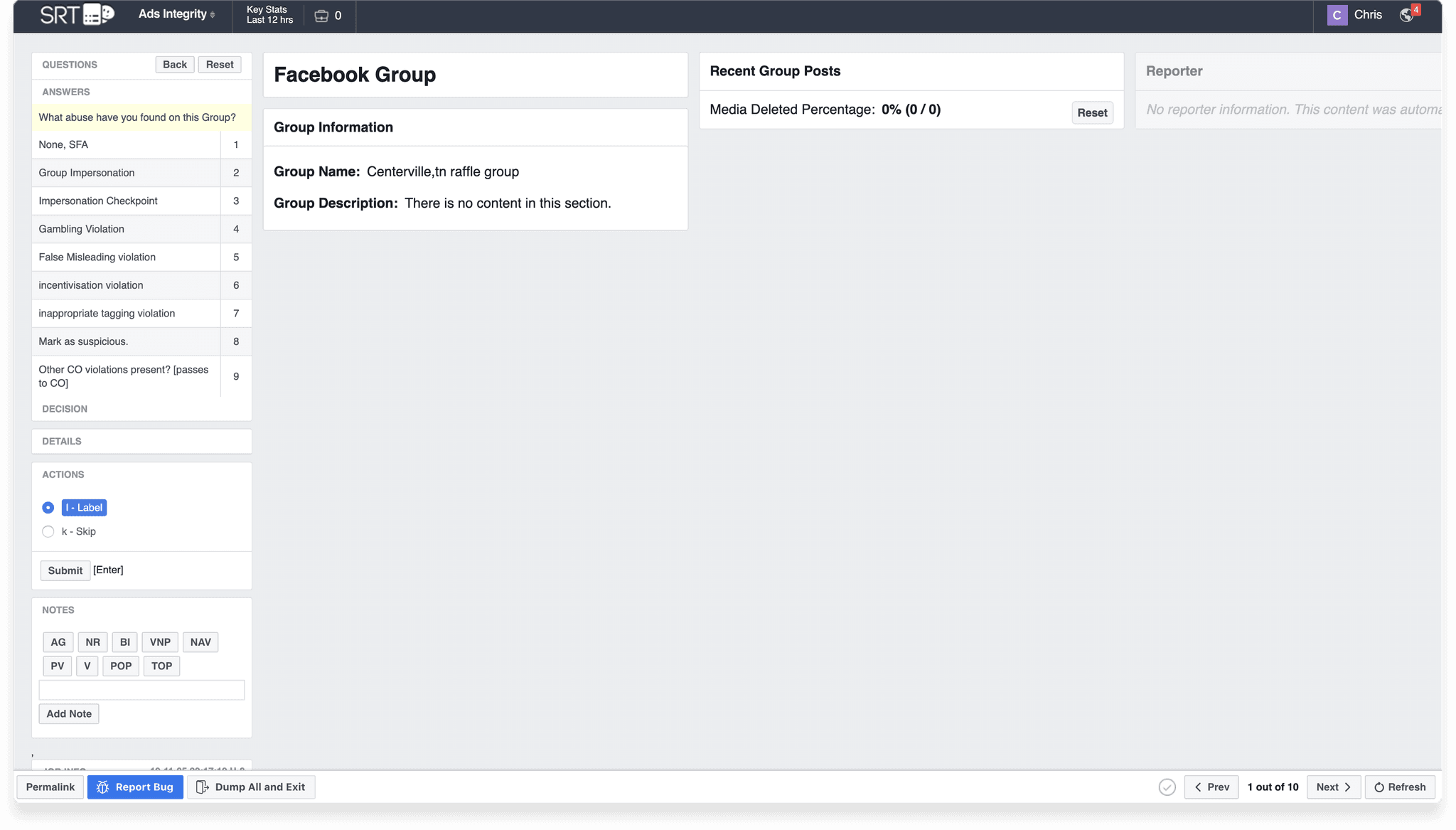

Before

When I joined, Facebook's review tooling was in disrepair. It was built, scrappily, by engineers early in Facebook's history. As the platform grew, new review types and workflows were shoe-horned in. The product was unstable, built on outdated infrastructure, and lacked any underlying design system.

North Star Vision

Our challenge was to rebuild and redesign Facebook's review tooling without adversely impacting ongoing content moderation. To do this, we crafted a vision for a single, modular review interface. We then socialized that vision and collaborated with engineering to make a plan for its implementation. It started with a single review framework:

Modular Component Library

While the framework remained the same across all types of review, we built a modular library of components, each representing a different piece of information about the types of content that can be shared on Facebook's family of apps: images, text posts, ads, videos, livestreams, and so on.

AI-Assisted Review

Once we had established a design system, we were able to explore tying human decision-making more tightly to automated decision-making. Even when our automated system couldn’t make a definite decision on a piece of content, it often had potentially valuable information that we wanted to surface to reviewers to support their efficiency, accuracy, and wellbeing.

Graphic content: Surfacing likely moments of graphic violence in videos

Obfuscating sensitive content: Protecting reviewers from witnessing unnecessary sensitive content

Object and figure detection: Identifying relevant cultural or political figures or objects